Generative AI isn’t just a wave; it’s a tidal shift. It can write poetry, summarize legal opinions, triage patient symptoms, and even spark new product ideas. But just beneath the surface of this creative renaissance lies a vulnerable architecture, one that can be manipulated, misused, or misunderstood.

The question isn’t whether generative AI is powerful. The question is: are we securing that power wisely?

Let’s explore how to secure generative AI by walking through its real-world risks and then applying a structured, risk-driven approach designed to prioritize what matters most.

Hallucinations: When AI Dreams Too Wildly

Ever asked an AI to draft a contract and gotten back terms that don’t exist? Or a research paper riddled with fake citations? These aren’t glitches; they’re hallucinations. Large language models don’t “know” facts. They predict a plausible sequence of words based on statistical patterns learned from their training data.

When used in high-stakes industries like healthcare, finance, or law, hallucinations can become compliance nightmares or public embarrassments.

Securing against this risk begins with implementing output validation pipelines, grounding responses in trusted data sources, and deploying moderation filters that flag or block nonsensical or risky outputs. Creativity is welcome, but not at the cost of credibility.
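To make output validation concrete, here is a minimal Python sketch of one such check. It assumes the model has been instructed to cite sources as `[DOC-<number>]` and that you hold the set of document IDs in your trusted corpus; the citation format, `validate_output`, and `ValidationResult` are hypothetical names for illustration. A real pipeline would also check whether the cited document actually supports the claim, not just whether the ID exists.

```python
import re
from dataclasses import dataclass, field

# Hypothetical citation format the model is prompted to use: [DOC-<digits>]
CITATION_PATTERN = re.compile(r"\[(DOC-\d+)\]")


@dataclass
class ValidationResult:
    approved: bool
    unknown_citations: list[str] = field(default_factory=list)
    reason: str = ""


def validate_output(text: str, trusted_ids: set[str], require_citation: bool = True) -> ValidationResult:
    """Hold back a response whose citations are missing or point outside the trusted corpus."""
    cited = CITATION_PATTERN.findall(text)
    if require_citation and not cited:
        return ValidationResult(False, [], "no citations to trusted sources")
    # Any ID the corpus doesn't contain is treated as a possible hallucination.
    unknown = [doc_id for doc_id in cited if doc_id not in trusted_ids]
    if unknown:
        return ValidationResult(False, unknown, "cites sources outside the trusted corpus")
    return ValidationResult(True)
```

A response citing `[DOC-999]` when only `DOC-101` and `DOC-204` exist would be routed to review instead of being shown to the user.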

Bias Baked In: When AI Mirrors Our Flaws

Bias isn’t always loud or obvious. Sometimes, it’s buried in the datasets. A resume tool trained on ten years of hiring data may unknowingly prefer certain names or educational backgrounds. An AI support agent might respond differently to queries based on inferred ethnicity or gender.

When training data reflects societal inequities, models amplify them at scale. The result? Automated discrimination.

Mitigating bias begins with conscious curation of training sets, cross-demographic testing of AI outputs, and bias-scanning mechanisms that analyze decision paths. And because fairness isn’t a checkbox but a moving target, this process must be continuous, deliberate, and deeply rooted in design.
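One common form of cross-demographic testing is counterfactual probing: send the model the same prompt with only a demographic signal (here, a name) swapped, then compare the results. This Python sketch assumes your model can be wrapped as a function returning a numeric score, such as a resume-fit rating; `counterfactual_gap`, the stub scorer, and the placeholder names are hypothetical, and what gap counts as acceptable is a policy decision the code cannot make for you.

```python
from statistics import mean
from typing import Callable


def counterfactual_gap(
    score_fn: Callable[[str], float],
    template: str,
    names_by_group: dict[str, list[str]],
) -> tuple[dict[str, float], float]:
    """Fill one prompt template with names from each group and compare mean scores.

    Returns the per-group mean score and the largest gap between any two groups.
    """
    group_means = {
        group: mean(score_fn(template.format(name=name)) for name in names)
        for group, names in names_by_group.items()
    }
    gap = max(group_means.values()) - min(group_means.values())
    return group_means, gap


# Hypothetical stand-in for a real model call, deliberately biased so there is something to detect.
def biased_stub(prompt: str) -> float:
    return 0.9 if ("Alice" in prompt or "Bob" in prompt) else 0.6


if __name__ == "__main__":
    template = "Rate this candidate from 0 to 1: {name}, 5 years of Python experience."
    groups = {"group_a": ["Alice", "Bob"], "group_b": ["Chidi", "Deepa"]}
    means, gap = counterfactual_gap(biased_stub, template, groups)
    print(means, round(gap, 2))
```

With the biased stub, the groups average 0.9 and 0.6, so a gap of about 0.3 would trip any reasonable threshold; running the same harness on every model release is what makes the check continuous.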

Data Leaks Without a Breach: The Privacy Paradox

Here’s a scary thought: your AI might remember what it should forget.

Imagine a chatbot trained on internal support tickets accidentally revealing personal health info in a product demo. Or an AI model surfacing customer payment details because it saw them in logs.

Generative AI can unintentionally regurgitate sensitive training data even when those disclosures weren’t explicitly prompted. This raises serious questions around compliance with privacy laws like GDPR, HIPAA, and India’s DPDP Act.

To address this, organizations must sanitize training inputs to minimize exposure, use privacy-preserving techniques (like synthetic data or federated learning), and ensure all data processing aligns with consent and purpose-limitation principles. Because an AI with a photographic memory needs a very strict diary.
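As one small piece of sanitizing training inputs, a pre-processing pass can redact obvious identifiers before text reaches a training or fine-tuning corpus. This minimal Python sketch, assuming plain-text records, masks email addresses and card-like numbers that pass the Luhn checksum (the check-digit scheme used by payment card numbers). The patterns and placeholder tokens are illustrative assumptions; regexes miss many identifiers, such as names, addresses, and free-text health details, so treat this as a first filter rather than a substitute for dedicated PII-detection tooling.

```python
import re

# Illustrative patterns; tune or replace them for your own data.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right, sum, check mod 10."""
    total = 0
    for position, char in enumerate(reversed(digits)):
        d = int(char)
        if position % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def _mask_card(match: re.Match) -> str:
    digits = re.sub(r"\D", "", match.group())
    # Only mask numbers that pass the checksum, so order IDs and similar numbers survive.
    return "[CARD]" if luhn_valid(digits) else match.group()


def redact(text: str) -> str:
    """Replace email addresses and Luhn-valid card numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return CARD_RE.sub(_mask_card, text)
```

Requiring the Luhn check keeps the filter from masking every long number, at the cost of letting mistyped card numbers through.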

Poisoned Prompts and Weaponized Outputs

Not all prompts are benign. Some are malicious by design.

A prompt injection attack can trick a model into revealing internal logic, bypassing content restrictions, or even executing unintended actions. Think of it as social engineering for machines.

On the other side, unfiltered outputs can cause harm, from generating hate speech to recommending unsafe medical advice.

That’s why securing generative AI requires dual vigilance: vetting what goes in and validating what comes out. That includes context-aware sanitization, dynamic prompt throttling, and security gates that monitor how outputs evolve in response to adversarial language.
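The "vetting what goes in" half can be sketched as a small gate in front of the model. This Python example combines a per-user token bucket (a standard rate-limiting algorithm) with a short list of suspicious phrases, and makes the throttling dynamic by charging flagged prompts more tokens, so repeated probing exhausts a user's budget faster. The phrase list, the costs, and the names `TokenBucket` and `screen_prompt` are hypothetical; keyword matching is easy to evade, so this complements output validation rather than replacing it.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Optional

# Illustrative patterns only; a denylist is a tripwire for logging and throttling, not a full defense.
SUSPICIOUS_PATTERNS = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (your )?system prompt", re.IGNORECASE),
]

NORMAL_COST = 1.0
SUSPICIOUS_COST = 3.0  # hypothetical penalty: flagged prompts drain the budget faster


@dataclass
class TokenBucket:
    """Token bucket: holds up to `capacity` tokens, refilled continuously over time."""
    capacity: float
    refill_per_sec: float
    tokens: float = field(init=False)
    last: float = field(init=False)

    def __post_init__(self) -> None:
        self.tokens = self.capacity
        self.last = time.monotonic()

    def allow(self, cost: float, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        # Refill for the time elapsed since the last check, never above capacity.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_sec)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


def screen_prompt(prompt: str, bucket: TokenBucket, now: Optional[float] = None) -> str:
    """Return 'allowed', 'flagged' (forward with extra monitoring), or 'throttled'."""
    suspicious = any(p.search(prompt) for p in SUSPICIOUS_PATTERNS)
    cost = SUSPICIOUS_COST if suspicious else NORMAL_COST
    if not bucket.allow(cost, now):
        return "throttled"
    return "flagged" if suspicious else "allowed"
```

Flagged prompts still reach the model here, but tagged, so output-side gates can apply stricter checks to those responses.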

Invisible Gateways: Who’s Talking to Your AI?

In the rush to integrate AI into chatbots, workflows, and third-party tools, many forget a basic principle of cybersecurity: Not everyone should have the keys.

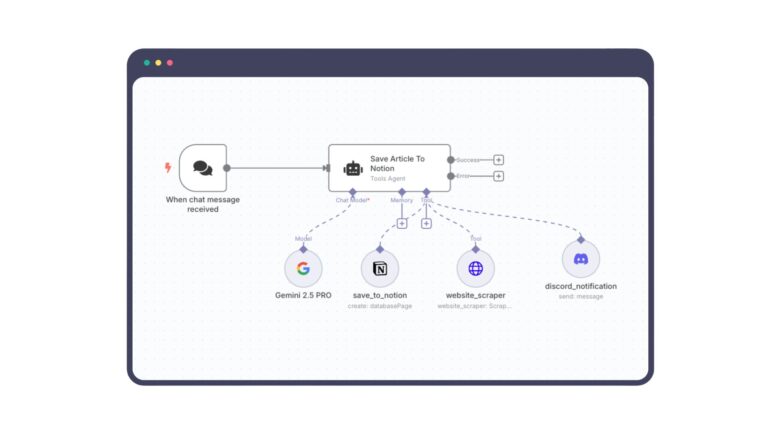

Left unchecked, AI interfaces can become soft targets for data exfiltration, privilege escalation, or backend abuse, especially when plugin ecosystems expand access without rigorous permission controls.

The fix? Enforce strong identity verification. Apply strict role-based access controls. Isolate AI systems from critical infrastructure. Treat AI endpoints like you would a high-value asset, not a casual API.
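Role-based access control for an AI integration can be as simple as a deny-by-default lookup performed before any plugin or tool call executes. In this Python sketch, the roles, tool names, and `authorize_tool_call` are hypothetical. The design choice worth copying is that permissions are checked against the authenticated human user's role, not the AI service account's, so a prompt-injected model cannot borrow its own broad access to escalate privileges.

```python
from enum import Enum


class Role(Enum):
    VIEWER = "viewer"
    ANALYST = "analyst"
    ADMIN = "admin"


# Hypothetical tools the AI can call on a user's behalf, granted per role.
ROLE_PERMISSIONS: dict[Role, frozenset[str]] = {
    Role.VIEWER: frozenset({"search_docs"}),
    Role.ANALYST: frozenset({"search_docs", "run_report"}),
    Role.ADMIN: frozenset({"search_docs", "run_report", "update_crm"}),
}


class PermissionDenied(Exception):
    """Raised when a tool call is not allowed for the requesting user."""


def authorize_tool_call(user_role: Role, tool: str, authenticated: bool) -> None:
    """Deny by default; check the human user's role, never the AI service's own rights."""
    if not authenticated:
        raise PermissionDenied("caller is not authenticated")
    allowed = ROLE_PERMISSIONS.get(user_role, frozenset())
    if tool not in allowed:
        raise PermissionDenied(f"role {user_role.value!r} may not call {tool!r}")
```

Any tool missing from the table is refused, so adding a new plugin grants no access until someone explicitly maps it to a role.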

Securing AI Requires More Than Firewalls. It Demands Foresight.

To protect generative AI at scale, organizations must move from reactive patches to proactive design. That begins with a three-step approach: identify risks, quantify them, and prioritize mitigation based on what the business can’t afford to lose.

1. Identify Risks Thoughtfully

Map your AI’s exposure across:

- Model behavior (hallucinations, bias)

- Data sensitivity (privacy, leaks)

- Operational entry points (inputs, APIs, plugins)

- Compliance exposure (industry regulations, reputational risks)

Think of this as creating a threat map, except it’s not the perimeter you’re guarding; it’s the mind of your AI.
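A threat map can start life as a plain risk register keyed by the four exposure areas above. In this Python sketch, the `Exposure` enum mirrors that list, while `Risk`, `threat_map`, and the sample entries are hypothetical. It groups identified risks by area and reports any area with nothing mapped, since an empty category usually means nobody has looked yet, not that there is no risk.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Exposure(Enum):
    # Mirrors the four exposure areas listed in the article.
    MODEL_BEHAVIOR = "Model behavior"
    DATA_SENSITIVITY = "Data sensitivity"
    OPERATIONAL_ENTRY_POINTS = "Operational entry points"
    COMPLIANCE = "Compliance exposure"


@dataclass(frozen=True)
class Risk:
    name: str
    exposure: Exposure
    component: str  # hypothetical field: which AI feature or integration is affected


def threat_map(risks: list[Risk]) -> tuple[dict[Exposure, list[str]], list[Exposure]]:
    """Group risk names by exposure area and list areas with no risks recorded yet."""
    grouped: dict[Exposure, list[str]] = defaultdict(list)
    for risk in risks:
        grouped[risk.exposure].append(risk.name)
    gaps = [area for area in Exposure if area not in grouped]
    return dict(grouped), gaps


if __name__ == "__main__":
    register = [
        Risk("Fabricated contract terms", Exposure.MODEL_BEHAVIOR, "contract drafter"),
        Risk("Health data in support tickets", Exposure.DATA_SENSITIVITY, "support chatbot"),
        Risk("Prompt injection via CRM plugin", Exposure.OPERATIONAL_ENTRY_POINTS, "CRM plugin"),
    ]
    mapping, gaps = threat_map(register)
    for area, names in mapping.items():
        print(f"{area.value}: {names}")
    print("Unmapped:", [g.value for g in gaps])  # Compliance exposure has no entries in this register
```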

2. Quantify the Damage Before It Happens

Each risk should be assessed across five vectors:

- Likelihood – How probable is the risk?

- Impact – What’s the worst-case outcome?

- Detectability – How easily can we spot it?

- Recoverability – Can we reverse it, and at what cost?

- Velocity – How fast can it spiral out of control?

This isn’t just about labeling risks; it’s about ranking them by urgency.
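There is no single standard formula for combining these vectors, so here is one hedged way to turn them into a ranking: rate each on a 1-to-5 scale oriented so that 5 is always worst (hard to detect, costly to recover, fast to spread), then multiply the ratings, in the spirit of the risk priority number used in failure mode and effects analysis (FMEA). The scale, the multiplication, and every name in this Python sketch are assumptions; a weighted sum is an equally reasonable choice if one vector should count more than the others.

```python
from dataclasses import dataclass, fields
from math import prod

VALID_RATINGS = range(1, 6)  # 1 = most favorable, 5 = worst


@dataclass(frozen=True)
class RiskAssessment:
    name: str
    likelihood: int      # 5 = almost certain to occur
    impact: int          # 5 = severe worst-case outcome
    detectability: int   # 5 = very hard to spot (inverted so higher means riskier)
    recoverability: int  # 5 = irreversible or very costly to undo
    velocity: int        # 5 = escalates almost immediately

    def __post_init__(self) -> None:
        for f in fields(self):
            if f.name != "name" and getattr(self, f.name) not in VALID_RATINGS:
                raise ValueError(f"{f.name} must be an integer from 1 to 5")

    def score(self) -> int:
        """FMEA-style product of the five ratings (range 1 to 3125)."""
        return prod(
            (self.likelihood, self.impact, self.detectability, self.recoverability, self.velocity)
        )


def rank_by_urgency(assessments: list[RiskAssessment]) -> list[RiskAssessment]:
    return sorted(assessments, key=lambda a: a.score(), reverse=True)


if __name__ == "__main__":
    # Made-up ratings purely for illustration.
    risks = [
        RiskAssessment("Hallucinated citation in a draft", 4, 3, 2, 2, 2),
        RiskAssessment("Customer PII regurgitated in a demo", 3, 5, 4, 5, 4),
    ]
    for r in rank_by_urgency(risks):
        print(r.score(), r.name)
```

With these made-up ratings, the PII risk scores 1200 against 96 for the hallucinated citation, even though the hallucination is rated more likely. A known drawback of multiplying, inherited from FMEA, is that very different profiles can land on the same score, so ties deserve a human look.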

3. Prioritize What Truly Matters

Not all risks deserve the same attention. Evaluate each one based on:

- Business Criticality – Does this AI output drive revenue or operations?

- Strategic Alignment – Does mitigating this risk support long-term digital goals?

- Residual Risk – What’s left vulnerable even after controls?

- Implementation Effort – Can this be mitigated quickly or does it require architectural redesign?

This balance ensures your team tackles high-impact, low-effort wins first while planning for deeper challenges long term.
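The balance described here is essentially a value-versus-effort matrix. In this Python sketch, the equal weights, the threshold of 3, the quadrant labels, and all names are assumptions rather than a standard. It averages the first three criteria into a value score, treats implementation effort separately, and orders the work so quick wins come first and architectural projects are scheduled next.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Mitigation:
    risk: str
    business_criticality: int   # 1-5
    strategic_alignment: int    # 1-5
    residual_risk: int          # 1-5, exposure remaining under current controls
    implementation_effort: int  # 1-5, 5 = architectural redesign

    def value(self) -> float:
        # Hypothetical equal weighting; tune the weights to your own risk appetite.
        return (self.business_criticality + self.strategic_alignment + self.residual_risk) / 3

    def quadrant(self, threshold: float = 3.0) -> str:
        high_value = self.value() >= threshold
        low_effort = self.implementation_effort < threshold
        if high_value and low_effort:
            return "quick win"
        if high_value:
            return "strategic project"
        if low_effort:
            return "fill-in"
        return "deprioritize"


# Quick wins first, then long-term projects; within a quadrant, higher value first.
QUADRANT_ORDER = {"quick win": 0, "strategic project": 1, "fill-in": 2, "deprioritize": 3}


def plan(mitigations: list[Mitigation]) -> list[Mitigation]:
    return sorted(mitigations, key=lambda m: (QUADRANT_ORDER[m.quadrant()], -m.value()))
```

For example, an output citation check (high value, effort 2) lands in "quick win", while retraining on sanitized data (high value, effort 5) becomes a "strategic project" to plan for rather than ignore.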

Final Word: Security Isn’t a Brake. It’s a Compass.

In the world of generative AI, innovation moves fast, but trust moves slower.

Securing AI isn’t about stalling progress. It’s about making sure the systems we build don’t unintentionally hurt the very people they’re meant to serve, balancing the cost of controls with the value of protection, and letting businesses determine what risks are tolerable versus what must be mitigated at all costs.

Some risks need only simple safeguards, while others demand robust, layered defenses. The smart move isn’t to chase perfection; it’s to align your security investments with your business priorities and risk tolerance.

So whether you’re a startup deploying your first AI model or an enterprise embedding LLMs into core operations, remember this: You’re not just shaping what the model outputs; you’re shaping how the world will experience it.