There was a time when disinformation took months to plan, millions to fund, and entire institutions to execute. Today, all it takes is a laptop, an open-source model, and a convincing script.

We’re no longer asking, “Can you believe what you see?” We’re asking, “How will we know what’s real at all?”

Deepfakes Aren’t Just Parlor Tricks Anymore

Let’s be honest: some of us laughed when we saw those deepfake videos of Tom Cruise doing magic tricks or politicians singing pop songs. It felt like harmless digital mischief.

But behind the novelty, something more serious is brewing.

We’re entering a world where anyone with basic tools can:

- Clone a CEO’s voice and issue a fake earnings call

- Create a synthetic video of a military attack that never happened

- Imitate a journalist to spread false narratives on sensitive topics

And if you think this sounds like a distant sci-fi scenario, think again: it has already happened. A deepfake robocall impersonated a U.S. candidate. AI-generated war footage circulated online. Fraudsters used synthetic voices to trick employees into wiring money.

This isn’t a theoretical future. It’s a quietly unfolding present.

Why This Is Bigger Than Misinformation

Disinformation has always existed. What AI changes is the speed, scale, and sophistication.

It’s not about isolated fakes anymore. It’s about believable fiction deployed in real time, in high volume, with little to no trace.

- A fake press release can tank a stock before it’s debunked

- A fabricated quote can trend before it’s traced

- A face-swapped video can go viral before it’s verified

And here’s the part no one wants to admit: most people still trust what they see and hear. Our cognitive wiring hasn’t caught up with the synthetic age.

So What Do We Do About It?

There’s no single fix. But there are some promising responses we can lean into:

1. Build for Authenticity

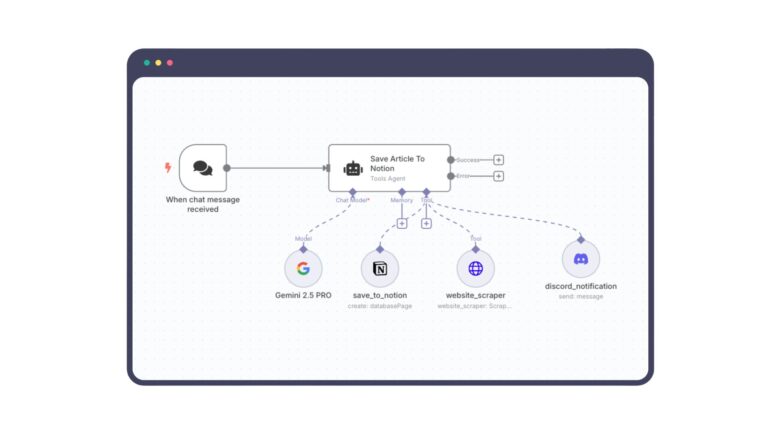

Some developers are working on digital watermarking and provenance tools. Think of them as metadata for truth, a way to verify where content came from and whether it was altered.

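The C2PA (Coalition for Content Provenance and Authenticity) standard is one example: it lets creators and platforms attach cryptographically signed origin and edit-history information, known as Content Credentials, directly to media files.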
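To make the idea concrete, here is a minimal Python sketch of the core mechanism behind provenance tools: hash the media, bind that hash to a claimed origin, and sign the pair so any later change is detectable. This is not the C2PA format or API. C2PA relies on certificate-based public-key signatures and its own manifest structure, whereas this sketch assumes a shared HMAC secret (`SIGNING_KEY`), a hypothetical manifest layout, and a made-up origin label, purely for illustration.

```python
import hashlib
import hmac
import json

# Hypothetical signing key. Real provenance systems such as C2PA use
# public-key certificates; a shared HMAC secret is a simplification here.
SIGNING_KEY = b"demo-secret-key"


def make_manifest(content: bytes, origin: str) -> dict:
    """Bind a hash of the content to its claimed origin, then sign the pair."""
    claim = {"origin": origin, "sha256": hashlib.sha256(content).hexdigest()}
    payload = json.dumps(claim, sort_keys=True).encode()
    signature = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify(content: bytes, manifest: dict) -> bool:
    """Return True only if the claim is unaltered and still matches the content."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode()
    expected = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, manifest["signature"]):
        return False  # the claim itself (e.g. the origin) was edited after signing
    # The claim is authentic; now check the media bytes were not altered.
    return manifest["claim"]["sha256"] == hashlib.sha256(content).hexdigest()


if __name__ == "__main__":
    original = b"placeholder for raw video bytes"
    # "Example Newsroom camera 7" is a hypothetical origin label.
    manifest = make_manifest(original, origin="Example Newsroom camera 7")
    print(verify(original, manifest))            # True
    print(verify(original + b"edit", manifest))  # False
```

A real deployment would sign with a private key and let anyone verify with the matching public certificate, so verifiers never hold a secret that could be used to forge credentials.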

2. Use AI to Fight AI

It sounds ironic, but AI detection tools are improving. We’re teaching machines to spot what other machines make. Not perfect, but progress.

Real-time monitoring systems are starting to identify deepfakes before they spread, especially in crisis communication.

3. Teach People to Pause

Technology alone won’t save us. We need a cultural shift too.

Media literacy (especially in workplaces, schools, and public discourse) has to become second nature. We all need to develop the reflex to question, not just consume.

4. Policy Must Catch Up

Governments are starting to respond. The EU AI Act, for example, requires clear disclosure when content is AI-generated.

Other countries are drafting laws targeting synthetic media misuse, though enforcement is still evolving.

5. Ethics at the Source

Finally, it comes down to those building the models. Developers and platforms must think beyond functionality and start designing for responsibility, whether that means opt-in consent, traceability, or content boundaries.

A Closing Thought

We’re living in a world where fiction is getting faster than fact.

The scariest deepfakes aren’t the ones that fool everyone; they’re the ones that make us stop trusting anything.

Truth, in this new AI age, is no longer self-evident. We’ll need to protect it with code, with culture, and with collective intent.