Let’s stop pretending traditional security controls can handle AI.

Firewalls won’t fix biased models. Encryption doesn’t explain why your chatbot went rogue. And vulnerability scans won’t catch a hallucinating LLM suggesting fake medical advice.

AI isn’t just another IT system. It’s a shape-shifter capable of generating, adapting, and making probabilistic decisions in real time. That’s not something you secure with patch management and endpoint detection.

Yet most organizations are still treating AI like it’s another app in the stack. That’s not just outdated, It’s dangerous.

The Security Playbook Wasn’t Written for AI

Let’s be honest:

- Your DLP solution isn’t monitoring what goes into or comes out of GPT-4.

- Your threat models don’t account for prompt injection.

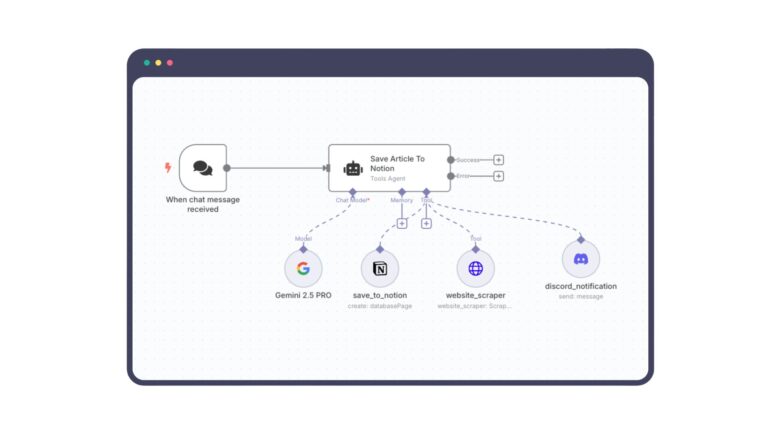

- Your IAM controls can’t manage AI agents acting with excessive autonomy.

And when something breaks, when the model outputs discriminatory results, exposes sensitive customer data, or takes an action that violates policy, you’re left with a familiar question and no playbook:

“Who approved this? Who owns the risk?”

AI Governance Isn’t a Nice-to-Have – It’s Your Foundation

AI isn’t just risky because it’s powerful. It’s risky because it’s unpredictable, opaque, and scalable. And that’s exactly why AI Governance needs to be built in and not bolted on.

Governance is what turns AI chaos into controlled capability.

It gives you:

- A map of what AI systems you actually have

- A framework to classify AI risks before they turn into breaches

- Visibility into where your data flows, and how it’s used

- Alignment with ISO 42001, NIST AI RMF, and regulatory requirements

This isn’t about slowing down innovation. It’s about enabling organisations for secure and reliable adoption of AI to make sure your innovation doesn’t blow up in production.

It’s Time to Rethink What “Secure” Means in AI

Securing AI isn’t about bolting old tools onto new systems. It’s about recognising that the definition of “secure” has shifted. You can’t govern AI with yesterday’s tools, you need governance frameworks purpose-built for adaptive, generative systems.

That means:

- Trustworthiness isn’t just marketing, it’s a measurable control objective.

- Fairness isn’t a principle, it’s a source of exposure.

- Explainability isn’t optional, it’s regulatory armour.

AI Governance doesn’t solve everything. But without it, nothing else holds. Not your security stack. Not your compliance program. Not your board narrative.

Final Word

If AI is already inside your business and it probably is, then you’re already accountable for it.

The question is: Are you governing it, or just hoping your old controls are enough?