Every now and then, a term sneaks into the AI conversation that sounds both mysterious and urgent. “Agentic AI” is one of them.

You’ve probably seen it in research papers, heard it on podcasts, or noticed investors tossing it around like the next frontier.

But ask five people what it actually means, and you’ll likely get five different answers.

So let’s unpack it. Not with academic jargon, but with clarity, curiosity, and a dose of real-world perspective.

Where Most AI Stops, Agents Begin

Let’s start with a definition: Agentic AI refers to systems that not only generate responses or predictions but can also decide, plan, and act autonomously toward achieving goals.

Think of your typical AI model, let’s say a chatbot or an image classifier. It’s reactive. You give it a prompt or an image, and it gives you a response. Smart? Absolutely. But it doesn’t do anything on its own. It doesn’t plan, it doesn’t act, it doesn’t remember.

That’s where agentic AI enters.

An agentic AI isn’t just reactive; it’s proactive. It can:

- Set a goal

- Break it into subtasks

- Decide what tools it needs

- Execute actions across systems

- Adapt based on the outcome
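To make the five capabilities above concrete, here is a minimal Python sketch of that loop. It is an illustration, not how any particular agent framework works: the tool functions (`flaky_search`, `backup_search`, `summarize`), the `FALLBACKS` table, and the hard-coded `plan` function are all hypothetical stand-ins. In a real system, an LLM would produce the plan and the tools would call live services.

```python
from typing import Callable

# Hypothetical tools. Real ones would call APIs, browsers, or databases.
def flaky_search(query: str) -> str:
    raise TimeoutError("primary search timed out")

def backup_search(query: str) -> str:
    return f"3 articles about {query}"

def summarize(text: str) -> str:
    return f"summary of: {text}"

TOOLS: dict[str, Callable[[str], str]] = {
    "search": flaky_search,
    "backup_search": backup_search,
    "summarize": summarize,
}

# Assumed adaptation rule: if a tool fails, try its fallback once.
FALLBACKS: dict[str, str] = {"search": "backup_search"}

def plan(goal: str) -> list[str]:
    """Break the goal into subtasks, one tool per subtask.
    Hard-coded here; an LLM would normally generate this."""
    return ["search", "summarize"]

def run_agent(goal: str) -> list[str]:
    """Execute the plan step by step and adapt when a step fails."""
    log: list[str] = []
    data = goal
    for tool_name in plan(goal):
        try:
            data = TOOLS[tool_name](data)  # execute the action
            log.append(f"{tool_name}: ok")
        except Exception as err:
            fallback = FALLBACKS.get(tool_name)
            if fallback is None:
                log.append(f"{tool_name}: failed ({err})")
                break
            data = TOOLS[fallback](data)  # adapt based on the outcome
            log.append(f"{tool_name}: failed, used {fallback}")
    log.append(f"result: {data}")
    return log
```

Calling `run_agent("agentic AI")` walks the plan, hits the simulated timeout on the first tool, switches to the backup, and carries on. That recovery step is the part a purely reactive model has no equivalent for.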

Imagine a customer support agent that doesn’t just respond to tickets but triages issues, checks backend systems, escalates critical bugs, and updates users automatically.

In other words, it isn’t just answering your question. It’s also figuring out which questions need asking in the first place, and then taking steps to answer them.

It’s Not Just One LLM Prompt. It’s a System.

Let’s clear up a common misconception: agentic AI is not just an LLM (like GPT-4) that chains a few calls together.

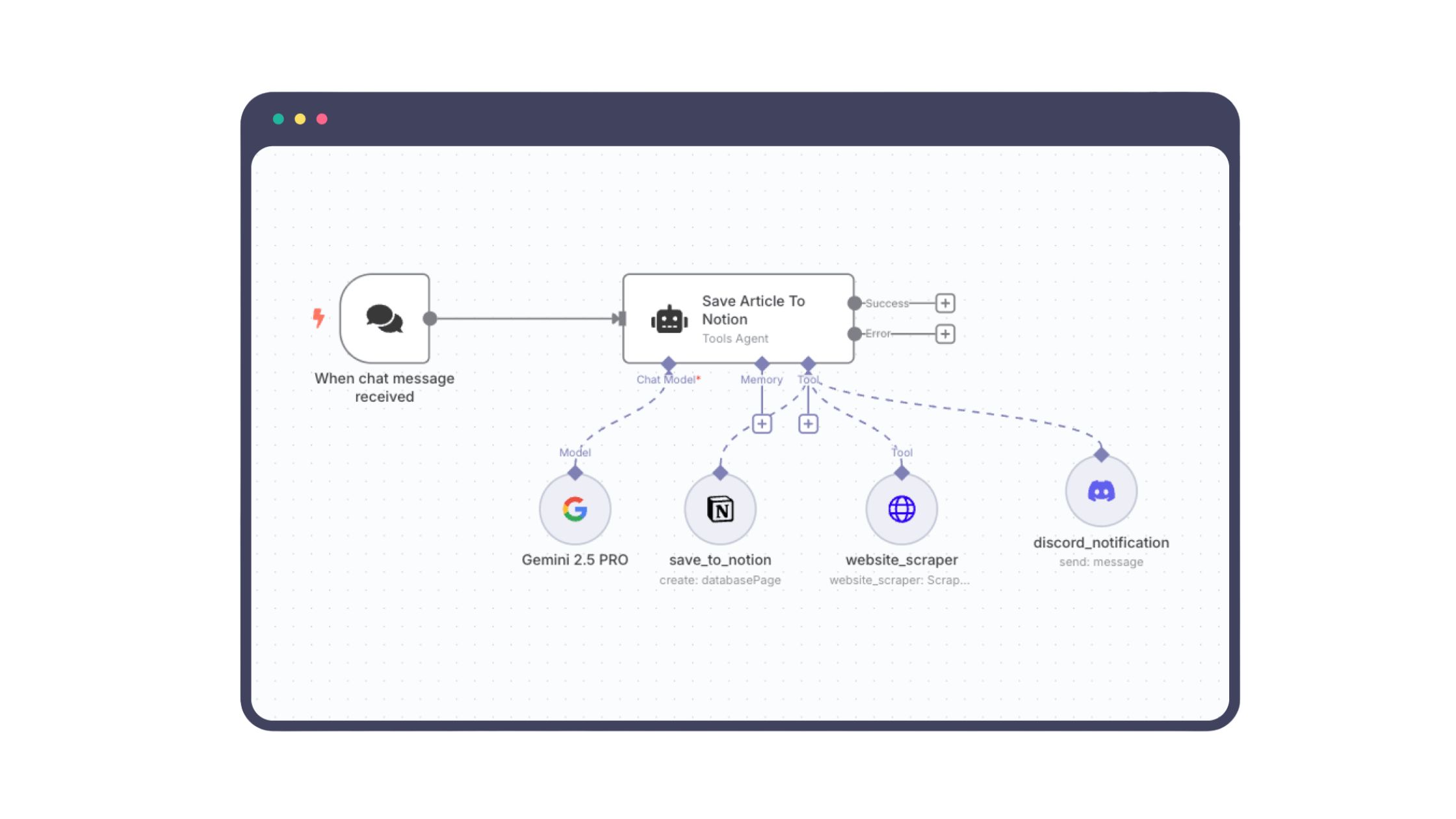

According to Anthropic’s recent work on agentic systems, true agents involve multiple moving parts:

- The LLM (or multiple LLMs): For language, reasoning, and decision-making

- A planner: To set goals, monitor progress, and revise strategy

- A memory component: To track context and results across steps

- Tool integration: APIs, browsers, code runners, databases, etc.

- An execution environment: Where actions are performed and observed

Imagine you’re using an agent to book a vacation:

It doesn’t just answer, “Here’s a flight.”

It checks your calendar, looks for deals, books hotels based on your preferences, compares car rentals, and notifies your team you’re OOO, all without you lifting a finger.
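Here is one way the parts listed above might fit together for the vacation example, as a rough Python sketch. Everything in it is an assumption made for illustration: the class names (`Memory`, `Environment`, `Agent`), the stub planner, the stub that stands in for the LLM, and the canned tool results. None of it comes from a real library or from Anthropic's work.

```python
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]

@dataclass
class Memory:
    """Tracks context and results across steps."""
    notes: list[str] = field(default_factory=list)

@dataclass
class Environment:
    """Where actions are performed and observed (in-process stubs here)."""
    tools: dict[str, Tool]

    def execute(self, tool_name: str, step: str) -> str:
        return self.tools[tool_name](step)

@dataclass
class Agent:
    choose_tool: Callable[[str], str]    # stand-in for the LLM's decision
    planner: Callable[[str], list[str]]  # turns a goal into ordered steps
    env: Environment
    memory: Memory = field(default_factory=Memory)

    def run(self, goal: str) -> list[str]:
        for step in self.planner(goal):
            tool_name = self.choose_tool(step)
            observation = self.env.execute(tool_name, step)
            self.memory.notes.append(f"{step}: {observation}")
        return self.memory.notes

# Hypothetical stubs so the sketch runs without any external service.
def stub_planner(goal: str) -> list[str]:
    return ["check calendar", "find flight", "book hotel"]

def stub_llm(step: str) -> str:
    return step.split()[-1]  # "check calendar" -> "calendar"

vacation_env = Environment(tools={
    "calendar": lambda step: "free June 3 to 10",
    "flight": lambda step: "found fare",
    "hotel": lambda step: "reserved",
})

agent = Agent(choose_tool=stub_llm, planner=stub_planner, env=vacation_env)
```

Running `agent.run("plan a vacation")` returns the notes held in memory, one per step. Keeping the planner, the tool choice, and the environment as separate pieces means each one can be swapped or audited on its own.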

So Why Does It Matter?

Because the moment AI stops being just a tool and starts acting as an autonomous system, everything changes.

- Security: You’re no longer securing just inputs and outputs; you’re securing decision loops and execution steps.

- Trust: Can you trust that the agent will act ethically, safely, and in alignment with your intent?

- Governance: Who’s accountable when an agent goes off-script or causes unintended harm?

These aren’t hypothetical risks. We’re already seeing early agent-based systems misfire: overbooking meetings, sending the wrong emails, or making unexpected purchases.

And these are just the mild cases.
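What might securing an execution step look like in practice? One common pattern is a policy check that every proposed action passes through before it runs. The Python sketch below is a simplified illustration under stated assumptions: the action names, the `SPEND_LIMIT_USD` threshold, and the three-way `allow` / `needs_approval` / `deny` outcome are hypothetical choices, not a standard.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Action:
    name: str
    cost_usd: float = 0.0

# Hypothetical policy values chosen for illustration only.
ALLOWED_ACTIONS = {"send_email", "schedule_meeting", "purchase"}
NEEDS_APPROVAL = {"purchase"}
SPEND_LIMIT_USD = 50.0

def review(action: Action, approved_by_human: bool = False) -> str:
    """Decide whether a proposed action may run.

    Returns "allow", "needs_approval", or "deny".
    """
    if action.name not in ALLOWED_ACTIONS:
        return "deny"  # anything off the allowlist is blocked
    if action.cost_usd > SPEND_LIMIT_USD:
        return "deny"  # hard cap, even with human approval
    if action.name in NEEDS_APPROVAL and not approved_by_human:
        return "needs_approval"  # pause and ask a person first
    return "allow"
```

With this policy, `review(Action("purchase", 20.0))` returns `"needs_approval"`, so the purchase waits for a person instead of going through silently. A check like this also gives governance something concrete to point at: the policy is written down, and each decision can be logged.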

Final Thought: It’s Not Sci-Fi. It’s Already Here.

Agentic AI isn’t something for the next decade. It’s already powering research agents, customer service copilots, and workflow automation tools.

If LLMs gave us a leap in intelligence, agents are giving us a leap in autonomy.

And with autonomy comes responsibility.

Not just for what the system does but for how we build, guide, and govern it.

Understanding agentic AI isn’t just for researchers anymore. It’s becoming foundational knowledge for product teams, CISOs, regulators, and even users.

Because soon, we won’t just be using AI.

We’ll be working with it.