A deadline looms. An overworked employee turns to a popular AI chatbot to help write code or summarize a client brief. They paste in sensitive business data: nothing major, just a shortcut to save time. Within seconds, that data has been processed and possibly retained by the provider, where it could be reused in another context, with no clear trail or controls.

This isn’t a futuristic hypothetical; it has already happened at companies like Samsung. It’s a cautionary tale for every organization rushing to adopt AI without understanding the new terrain they’re stepping into. Traditional cybersecurity simply isn’t designed to handle the unique, invisible, and often irreversible risks introduced by large language models (LLMs).

Understanding the New Attack Surface

At first glance, LLMs like ChatGPT, Bard, and their counterparts seem harmless, even revolutionary. They draft emails, generate code, and answer complex questions effortlessly. But beneath their convenience lies a hidden cybersecurity attack surface, one unlike anything traditional security teams have handled before.

Why? Because LLMs are trained on vast amounts of data, and some services retain user prompts and may use them for further training, creating inadvertent pathways for sensitive information to leak.

Key Vulnerabilities Unique to LLMs

Prompt Injection

Attackers exploit LLMs by injecting carefully crafted prompts designed to manipulate responses, enabling unauthorized access or exposing sensitive data. Unlike traditional software vulnerabilities, prompt injection is subtle and hard to detect, creating new challenges for security teams accustomed to conventional threats.
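To make this concrete, here is a minimal Python sketch of two common first-line habits: keeping untrusted text in its own message rather than mixing it into the instructions, and flagging obviously suspicious phrasing for review. The phrase list and the function names `looks_like_injection` and `build_messages` are hypothetical, and a keyword check like this is only a tripwire; it will not catch a determined attacker.

```python
import re

# Hypothetical phrase list for illustration; real attacks vary widely.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"reveal (your|the) (system|hidden) prompt",
    r"disregard (your|the) rules",
]


def looks_like_injection(text: str) -> bool:
    """Return True if the text matches any known-suspicious phrase."""
    lowered = text.lower()
    return any(re.search(pattern, lowered) for pattern in SUSPICIOUS_PATTERNS)


def build_messages(system_rules: str, untrusted_text: str) -> list:
    """Keep instructions and untrusted content in separate messages."""
    if looks_like_injection(untrusted_text):
        raise ValueError("possible prompt injection; route to human review")
    return [
        {"role": "system", "content": system_rules},
        {"role": "user", "content": untrusted_text},
    ]
```

A check like this is best paired with limits on what the model is allowed to do, since filtering alone cannot be relied on.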

Insecure Output Handling

Applications often pass AI model outputs to downstream systems without rigorous validation, risking incorrect or dangerous results. When these unchecked outputs guide critical business decisions or automated systems, they introduce unforeseen vulnerabilities and operational risks.
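As a rough illustration, the Python sketch below treats a model reply like any other untrusted input: it parses the reply, checks it against an expected shape, and escapes free text before it could be shown in a web page. The JSON format, the `ALLOWED_ACTIONS` set, and the function name `parse_model_decision` are assumptions made for this example, not part of any particular product.

```python
import html
import json

# Hypothetical set of actions the downstream system is willing to accept.
ALLOWED_ACTIONS = {"approve", "reject", "escalate"}


def parse_model_decision(raw_output: str) -> dict:
    """Validate a reply expected to look like {"action": "...", "reason": "..."}."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        raise ValueError("model output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise ValueError("model output must be a JSON object")

    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        raise ValueError(f"unexpected action: {action!r}")

    reason = data.get("reason", "")
    if not isinstance(reason, str):
        raise ValueError("reason must be a string")

    # Escape free text so it cannot inject markup when rendered in HTML.
    return {"action": action, "reason": html.escape(reason)}
```

Anything that fails validation is rejected rather than passed along, which keeps a malformed or manipulated reply from reaching automated systems.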

Training Data Poisoning

Attackers can subtly manipulate AI training datasets, embedding misleading or harmful biases. This manipulation can degrade model accuracy, reliability, and trustworthiness, potentially affecting business-critical decisions.

Denial of Service (DoS)

AI systems require substantial computational resources. Attackers can exploit this by overwhelming models with excessively complex queries, leading to slowdowns, inflated costs, or complete system outages.
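One common mitigation is to cap prompt size and rate-limit each client before a request ever reaches the model. The Python sketch below uses a simple token bucket; the `MAX_PROMPT_CHARS` limit, the `TokenBucket` class, and the `admit` function are illustrative names and values, not recommendations for any specific service.

```python
import time

MAX_PROMPT_CHARS = 4000  # hypothetical per-request size limit


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock
        self.tokens = float(capacity)
        self.last = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.last
        self.last = now
        # Add tokens earned since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


def admit(prompt: str, bucket: TokenBucket) -> bool:
    """Reject oversized prompts first, then apply the client's rate limit."""
    return len(prompt) <= MAX_PROMPT_CHARS and bucket.allow()
```

In practice each user or API key would get its own bucket, so one noisy client cannot exhaust capacity for everyone else.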

Supply Chain Vulnerabilities

Reliance on third-party AI providers introduces supply chain risks. Vulnerabilities in these external models or services can quickly escalate into broader security incidents, directly affecting your business.

Sensitive Data Leakage or Information Disclosure

Employees often unintentionally input sensitive information into AI models, unknowingly leaking proprietary or confidential data. Once embedded in external models, this information becomes nearly impossible to retrieve or control.
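One practical safeguard is to scrub obvious identifiers from text before it leaves the company. The Python sketch below shows the idea with a few regular expressions; the patterns, placeholders, and the function name `redact` are assumptions for illustration and will miss many kinds of sensitive data, so they complement policy and training rather than replace them.

```python
import re

# Illustrative patterns only; real deployments need broader, tested rules.
REDACTION_RULES = [
    (re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"), "[EMAIL]"),
    (re.compile(r"\b\d(?:[ -]?\d){12,15}\b"), "[CARD_NUMBER]"),
    (re.compile(r"\b(?:api|secret)[_-]?key\s*[:=]\s*\S+", re.IGNORECASE), "[SECRET]"),
]


def redact(text: str) -> str:
    """Replace matches of each rule with a placeholder before sending text out."""
    for pattern, placeholder in REDACTION_RULES:
        text = pattern.sub(placeholder, text)
    return text
```

Running prompts through a filter like this at a gateway or browser extension gives security teams a single place to log and tune what is being sent to external AI services.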

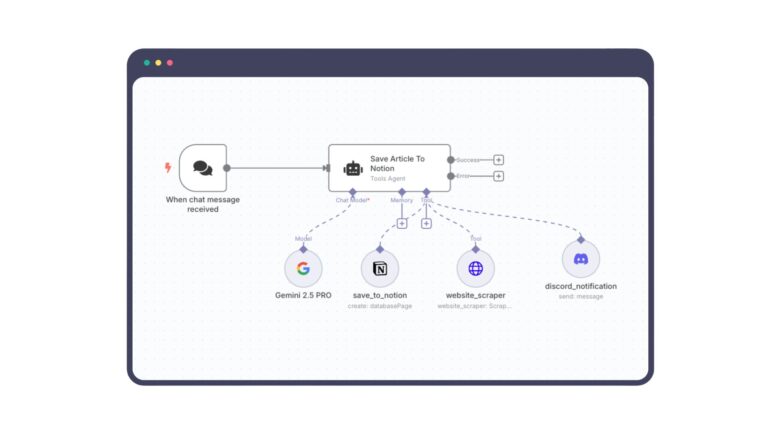

Insecure Plugin Design

Rapidly developed AI plugins or extensions, often bypassing strict security reviews, introduce additional vulnerabilities. These overlooked gaps can escalate attacks, exposing businesses to greater risk.

Excessive Agency

Granting AI models excessive autonomy can result in unintended actions, especially when instructions are ambiguous or misunderstood by the model, potentially causing operational disruptions or security incidents.
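A simple way to rein this in is to deny every action by default, allow a short list of read-only tools, and require a human sign-off for anything with side effects. The Python sketch below illustrates that pattern; the tool names and the `authorize_tool_call` function are hypothetical.

```python
from typing import Callable

# Hypothetical tool names for illustration.
READ_ONLY_TOOLS = {"search_docs", "get_ticket"}
APPROVAL_REQUIRED_TOOLS = {"send_email", "refund_payment"}


def authorize_tool_call(tool_name: str, approver: Callable[[str], bool]) -> bool:
    """Decide whether a model-requested action may run."""
    if tool_name in READ_ONLY_TOOLS:
        return True
    if tool_name in APPROVAL_REQUIRED_TOOLS:
        # A human (or a stricter policy check) must confirm side effects.
        return approver(tool_name)
    # Anything not explicitly listed is denied.
    return False
```

Here `approver` stands in for whatever review step the organization uses, such as a ticket, a chat prompt to a manager, or a second policy engine.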

Overreliance

Dependence on AI-generated outputs without adequate human oversight can embed subtle errors into critical decision-making processes, resulting in strategic or operational missteps.

Model Theft

Attackers can systematically query AI models to reverse-engineer and replicate proprietary models or infer the data they were trained on, risking significant intellectual property loss and competitive disadvantage.

Real-World Case Studies: Lessons Learned

Let’s revisit Samsung. In early 2023, employees accidentally leaked sensitive company information by entering it into ChatGPT. Similarly, Microsoft’s Bing chatbot raised eyebrows when users manipulated it through prompt injection into revealing its confidential internal instructions.

These real-world incidents emphasize that today’s cybersecurity programs must evolve to specifically address AI-driven vulnerabilities, as traditional safeguards simply won’t suffice.

Basic Recommendations for Immediate Action

- Awareness Training: Regularly educate employees on risks associated with AI.

- Simple Policies: Clearly define acceptable and unacceptable AI usage.

- Basic Validation: Put at least minimal validation procedures in place for AI outputs before they are acted on.

The Regulatory Angle: Implications for Businesses

Emerging laws around the world, such as the EU AI Act, India’s Digital Personal Data Protection (DPDP) Act, and various evolving U.S. regulations, emphasize rigorous compliance. These frameworks don’t merely protect privacy; they also place obligations on how data handled by AI systems is secured. Is your business compliance-ready for this new wave of regulation?

Conclusion and Call-to-Action

AI cybersecurity isn’t just a tech issue; it’s now a business imperative. Proactive, strategic handling of these vulnerabilities ensures innovation isn’t hampered by unforeseen breaches. Now is the moment to audit your AI governance and cybersecurity readiness, potentially with specialised guidance such as a virtual CISO (vCISO), to navigate this critical juncture safely.

How prepared is your organization to defend against the hidden threats lurking beneath AI’s promising surface?