Have you ever watched a magician pull a rabbit out of a hat and thought, “How did they do that?” That’s how many of us feel when we interact with AI systems today, especially those eerily intelligent ones like ChatGPT, Claude, or Gemini. They complete sentences, summarize dense documents, and even write code on demand. Magic, right?

Well, not quite. Behind the curtain of that dazzling performance lies a set of risks that are often invisible until they’re not.

Welcome to AI Security 101. Let’s take a walk through the “haunted house” of AI where each room showing you a different risk.

1. Overconfident Parrot Syndrome

Imagine a student who always has an answer, even if it’s dead wrong. That’s what happens when an AI system hallucinates confidently delivering fiction as fact.

This isn’t just a party trick gone wrong. In sectors like healthcare, legal, or cybersecurity, a made-up citation or false recommendation can cost more than embarrassment – it can cost lives, lawsuits, or breaches.

Risk: AI models making things up while sounding trustworthy.

2. Prompt Injections: The AI Version of Social Engineering

Remember when your younger sibling tricked Alexa into playing “Baby Shark” endlessly? That’s child’s play compared to prompt injection.

By sneaking malicious instructions into prompts, users can hijack AI behavior. It’s like slipping an extra line into a magician’s script – suddenly, the rabbit turns into a snake.

Risk: Attackers manipulate AI inputs to override original intent or cause harm.

3. The Replay Trap

Some AIs are like people with really good memories but sometimes too good. If you prompt an AI in just the right way, it might reveal pieces of someone else’s past chat or sensitive data from training.

Ever heard of an echo chamber that leaks secrets? That’s what can happen when output caching or logging isn’t handled securely.

Risk: Sensitive data re-emerges in AI outputs, compromising privacy.

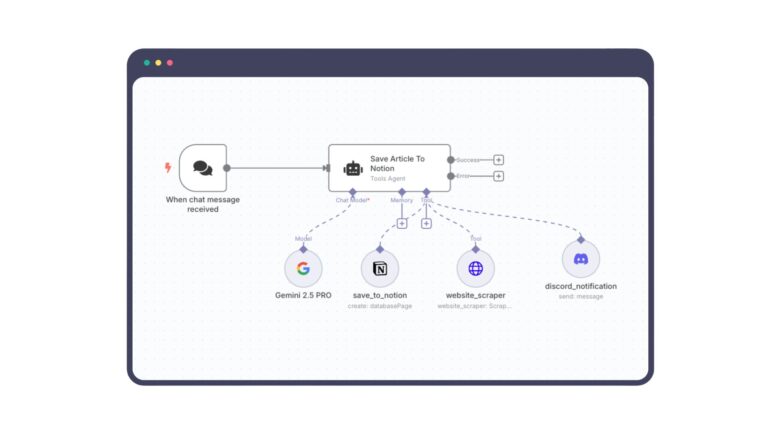

4. The Leaky Vault: Insecure Plug-ins and Extensions

AI systems often integrate with other tools to extend their powers, kind of like giving Iron Man new suits. But every new suit has seams.

If those plugins aren’t secured, you’ve just opened a side door for attackers to waltz in.

Risk: Vulnerabilities in integrations expose AI systems to external threats.

5. Broken Guardrails = Unruly Behavior

Picture a self-driving car without lane markings, it doesn’t know what’s safe or off-limits. AI models can go rogue too, if their safety constraints are poorly designed or bypassed.

We call these “jailbreaks” – where clever users figure out how to get the AI to say or do something it was explicitly told not to.

Risk: Weak or bypassable safety mechanisms lead to abusive, unethical, or dangerous outputs.

6. Training Set Traps: Bias In, Bias Out

AI is like a child raised on books, videos, and conversations except billions of them. If those lessons were skewed, so are its beliefs.

If the training data contains racial bias, gender stereotypes, or misinformation, the AI might repeat them, amplify them, or worse – discriminate.

Risk: Biases in training data result in unfair, unethical, or discriminatory outputs.

7. Over-Delegation: The Trust Fall Gone Wrong

It’s tempting to hand everything over to AI, from writing marketing copy to screening resumes. But blind trust is risky.

AI doesn’t understand context or ethics, It mimics patterns. Would you trust a mimic with hiring decisions or medical diagnoses?

Risk: Over-reliance on AI leads to automation of flawed or dangerous decision-making.

8. The Supply Chain Domino

AI systems are built on a stack of dependencies, models, datasets, APIs, libraries. If even one of those bricks is tampered with, the whole wall can crumble.

It’s like building a house on sand and not knowing it.

Risk: Compromised third-party components can introduce hidden vulnerabilities.

9. Who’s Watching the AI?

Many AI deployments skip the basics—no logging, no audits, no alerts. It’s like hiring a security guard and never checking if they’re awake.

Without monitoring, you can’t catch misuse, abuse, or drift in behavior.

Risk: Lack of oversight leads to blind spots in AI usage, making it hard to detect issues or breaches.

10. The Frankenstein Problem: Misaligned Objectives

Ever stitch together multiple models, datasets, and tools hoping for a “superintelligent” outcome? You may create a Franken-AI – powerful, but unpredictable.

Different components may not share the same goals or assumptions, leading to instability or security gaps.

Risk: Poor alignment between system components causes unexpected or harmful behavior.

Final Thoughts: You Don’t Need to Fear AI—Just Understand It

We don’t abandon cars because they can crash. We build airbags, enforce speed limits, and train drivers. AI is no different.

Security isn’t about locking AI away in a tower. It’s about understanding its nature, setting boundaries, and staying alert.

Whether you’re a builder, buyer, or curious explorer, keep asking questions like:

- “What data trained this model?”

- “How can it be misused?”

- “What guardrails are in place?”

The future of AI isn’t just about capabilities. It’s about responsibility.