Back in the day, my biggest headache was someone plugging an unauthorized router under their desk. Today, my headache wears a halo, writes fluent code, sounds like Morgan Freeman and no one knows who gave it access to the production database.

That’s AI for you. A marvel. A menace. And a magnificent reason to lose sleep.

Let’s be honest: most of us in cybersecurity didn’t sign up for this. We came to secure systems, networks and assets with an IP address. But AI? AI doesn’t play by our rules, it writes new ones.

So now we’re here. In a world where your firewall logs are clean, but your AI just made a legally binding decision, violated three privacy laws, and hallucinated a fake invoice.

Let’s talk governance.

First, a Personal Rule: Never Trust a Model That Smiles Too Much

I’ve reviewed more security programs than I care to count. But here’s a pattern, when a company starts experimenting with AI, governance is either absent or an afterthought. Why? Because everyone’s too busy playing with the magic.

- “Look, it rewrote our entire sales pitch!”

- “Hey, it diagnosed that X-ray better than the intern!”

- “What if we replaced our onboarding team with a chatbot?”

Fine. Impressive. But here’s what they’re not asking:

- Where’s the model pulling its data from?

- Is it leaking customer PII in the autocomplete?

- Can it be jailbroken with clever prompting?

- What happens when it goes off-script?

And that’s where Gartner’s AI Governance framework AI Trust, Risk, and Security Management starts making sense.

AITRiSM Isn’t a Framework. It’s a Reality Check.

Most frameworks are spreadsheets with a PR team. AiTRISM, thankfully, feels like it was written by someone who’s been in the trenches. It speaks to those of us who’ve had to explain to a board why their million-dollar model said something offensive in a product demo.

Let’s break it down, security-vet style.

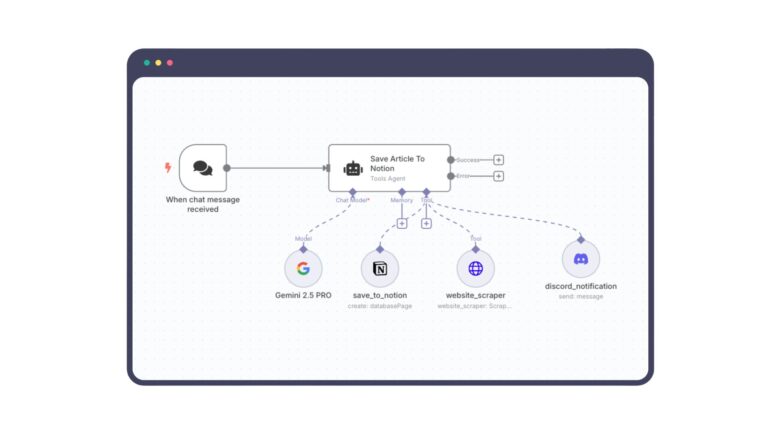

ModelOps: Your CI/CD Pipeline Just Got Weirder

Back in software land, we had dev, test, stage, prod and life was good. Now? You have models that:

- Change behavior after retraining

- Perform worse in real-time than in test environments

- Pick up bad habits from user inputs like a moody teenager

Without ModelOps tracking drift, versioning models, enforcing lineage you’re essentially letting a black box make business decisions, unmonitored and unquestioned. That’s not innovation, that is gambling with someone else’s money.

AI Security: Welcome to the Funhouse

The AI threat landscape isn’t just new , it’s absurd.

- Prompt injection feels like SQLi’s weird cousin who writes poetry.

- Data poisoning turns your AI into a misinformation machine.

- Model inversion lets attackers reconstruct your training data — great if you’re nostalgic about data breaches.

Your traditional vulnerability scanner? Useless. It doesn’t speak TensorFlow. And pen testers? They’re still figuring out how to break a chatbot without sounding like they’re roleplaying.

Securing AI isn’t just plugging holes. It’s learning an entirely new language and realizing half the dictionary hasn’t been written yet.

Explainability: “Why Did the Model Do That?”

My least favorite question. Because most of the time, the answer is: “We’re not sure.”

Even with tools like SHAP or LIME, explainability is fuzzy. Especially with LLMs. These things don’t reason, they predict. They don’t understand instead they approximate.

And yet, regulators, customers, and auditors all want answers.

“Why was this loan denied?”

“Why did your model flag this email as insider threat?”

“Why did the AI recommend firing the employee?”

Good luck explaining cosine similarity in a courtroom.

Bias and Ethics: The Landmines No One Wants to Touch

AI is trained on history and history is biased. So your model? Biased by design.

I’ve seen facial recognition fail on darker skin tones. I’ve seen hiring algorithms quietly filter out maternity gaps not because anyone intended harm but because no one questioned the training data.

Bias isn’t just an ethical issue, it’s a liability. In the age of global AI regulation, an “oops” moment can cost you millions or your reputation.

Governance means saying: “No, we won’t deploy this until we know what it’s learning.” And that’s a tough sell to leadership when the model’s performance graph looks like a hockey stick.

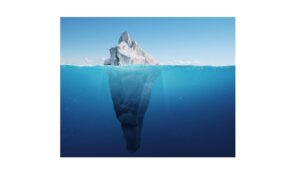

Privacy and Compliance: When Shadow AI Bites Back

Here’s a scenario I see too often:

Your team is sending client data to a public LLM API to draft emails faster. No one filed a DPIA and no one vetted the terms of service. The model learns, remembers and the data? Gone to the ether.

This isn’t just a DLP problem, this is a shadow governance crisis. AiTRISM insists on visibility, on consent and on boundaries.

Because if your AI pipeline doesn’t align with your privacy policies, you’re not just non-compliant but vulnerable too.

So Where Do We Go From Here?

Let me give it to you straight:

- AI is not secure by default.

- It does not come with ethical guardrails out of the box.

- And it definitely doesn’t stop to ask: “Should I be doing this?”

That’s our job. And governance real, mature, cross-functional governance is the only way we scale AI safely. Not just for compliance, not just for optics but for resilience.

My Closing Thought

I’ve been in security long enough to know we rarely get second chances with risk. AI is giving us a first chance right now to build thoughtfully, govern intentionally, and admit we don’t know all the answers yet.

And we’d be fools not to use it before walking deeper into the AI woods.